When presented with a traditional deployment question for the web, such as “how do I deploy an API?” or “how do I host a static site?”, there are now a lot more interesting, flexible and cost-effective solutions than there were just a year or two ago.

When I started out building software, there were only a limited number of answers to questions like these. Generally they would involve obtaining a server (either a VPS in someone else’s data centre or in an office broom cupboard), installing Windows + IIS (or Linux + Apache) and managing the whole stack, right down to the OS.

Fast forward a few years, however, and the situation is a lot more interesting! The answer to such questions is now, usually, “it depends”.

Much has been written about PaaS offerings like AWS Lightsail and Azure App Service, and more recently “serverless” solutions like AWS Lambda and Azure Functions have graduated from buzzwords to solid, mature platforms.

What I’d like to talk about today, however, is a technology (and methodology) that I believe has the potential to completely revolutionise how we think about building, deploying and managing web services.

Cloudflare Workers are lightweight JavaScript functions that sit between the client and the server. They can modify or re-route an HTTP request and response in flight, make sub-requests, and keep running longer tasks after the response has been sent, all using the JavaScript Service Worker API. If you’re already using Cloudflare for DNS, caching or SSL, then requests to your origin (wherever that may be) are already being routed through their infrastructure, so the barrier to entry is very low.
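As an illustration of that last capability, here is a minimal sketch, assuming the Service Worker syntax described in this post: the request is passed straight through to the origin, while `event.waitUntil()` keeps the Worker alive long enough to finish a slower task after the response has gone back. The logging endpoint and the `logRequest` helper are hypothetical, made up for this example.

```javascript
// Hypothetical endpoint that collects request logs.
const LOG_ENDPOINT = 'https://logs.example.com/ingest';

function handleFetch(event) {
  const request = event.request;
  // Pass the request on to wherever it was going (the origin).
  event.respondWith(fetch(request));
  // waitUntil() keeps the Worker running until this promise settles,
  // even if the response has already been returned to the client.
  event.waitUntil(logRequest(request));
}

// Hypothetical helper: POST a small JSON record describing the request.
async function logRequest(request) {
  await fetch(LOG_ENDPOINT, {
    method: 'POST',
    headers: { 'content-type': 'application/json' },
    body: JSON.stringify({ url: request.url, method: request.method, at: Date.now() }),
  });
}

// Register the handler only where the Workers runtime provides addEventListener.
if (typeof addEventListener === 'function') {
  addEventListener('fetch', handleFetch);
}
```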

Some intriguing possibilities…

There are some great blog posts, and plenty of ideas in Cloudflare’s own developer documentation, on how to get the most out of a service like this, but there are two specific use cases that excite me most (for now, anyway; I keep thinking of new ideas!).

The first is the ability to implement super-lightweight API gateways that can aggregate multiple back ends spread across different providers, without having to resort to heavyweight API Gateway products like Azure API Management or Kong.
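To make the gateway idea concrete, here is a minimal sketch, assuming two hypothetical JSON back ends on different providers (the URLs and the merged response shape are illustrative only). The Worker fans one incoming request out to both back ends in parallel with `Promise.all`, forwards the caller’s `Authorization` header if there is one, and merges the results into a single JSON response. A failing back end is reported in the payload rather than failing the whole request.

```javascript
// Hypothetical back ends, possibly hosted with different providers.
const BACKENDS = {
  users: 'https://users-api.example.net/users',
  orders: 'https://orders-api.example.org/orders',
};

async function aggregate(request) {
  const headers = { accept: 'application/json' };
  const auth = request.headers.get('authorization');
  if (auth) headers.authorization = auth; // pass the caller's credentials through

  // Fire all sub-requests concurrently rather than one after another.
  const entries = await Promise.all(
    Object.entries(BACKENDS).map(async ([name, url]) => {
      try {
        const res = await fetch(url, { headers });
        return [name, res.ok ? await res.json() : { error: res.status }];
      } catch (err) {
        // Network or parse failure: report it instead of failing everything.
        return [name, { error: String(err) }];
      }
    })
  );

  return new Response(JSON.stringify(Object.fromEntries(entries)), {
    headers: { 'content-type': 'application/json' },
  });
}

if (typeof addEventListener === 'function') {
  addEventListener('fetch', (event) => event.respondWith(aggregate(event.request)));
}
```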

The second, and I think perhaps the most interesting, is that this technology opens up the possibility of not having any origin at all…

Now, I know that the irony of the term “serverless” is that there are just as many (if not more) actual, physical servers involved in fulfilling a serverless request as there are in any other case. And of course, a request/response cycle always has an origin.

…that being said, not having to even think about what that origin is, or where it might be located, is a liberating idea. With a technology like Cloudflare Workers, you can intercept a request in flight, build a response, pull in static content from other CDNs and then send back a response to your client, without ever hitting a traditional origin server. Everything happens inside Cloudflare’s “edge”, the crazy-fast network layer that we’d normally associate with DNS routing and caching.

You can also completely ignore the question of just where on the planet your code is running. The answer is, it runs everywhere. Cloudflare automatically copies your Worker code across their global datacenter network (currently around 160 strong), so if you’re fortunate enough to have a service used by people all over the world, your code is always executed right on their doorstep.

Pretty impressive.

A Simple Example

Workers use the Service Worker API and its “fetch” event to intercept requests in flight, giving you the tools to modify them and apply whatever logic you choose. When you add a Worker to your domain, you start out with a basic script that receives each request and simply passes it on unchanged. You can then build on this example to create something of your own.
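The starter script is roughly equivalent to the sketch below (the exact template Cloudflare provides has changed over time, so treat this as an approximation rather than a copy of it): register a “fetch” listener, hand the request to a handler, and let the handler forward it unchanged with the Fetch API.

```javascript
// Forward the incoming request unchanged and return whatever the origin sends back.
async function handleRequest(request) {
  return fetch(request);
}

if (typeof addEventListener === 'function') {
  addEventListener('fetch', (event) => {
    // respondWith() accepts a Response or a Promise that resolves to one.
    event.respondWith(handleRequest(event.request));
  });
}
```

Everything interesting happens by replacing the body of `handleRequest`.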

For quite some time now, the website for my consultancy, Morrotec, has been a Craft CMS site that never got the attention it deserved. It was eventually stopped, leaving visitors with an ugly “this site is stopped” 503 message. I’ve recently had some new branding created, and while I was waiting for the new landing page designs, I thought it would be nice to serve something to visitors of morrotec.co.uk.

This seemed a perfect opportunity to test my origin-less static content idea, so I adapted the standard Worker to achieve this.

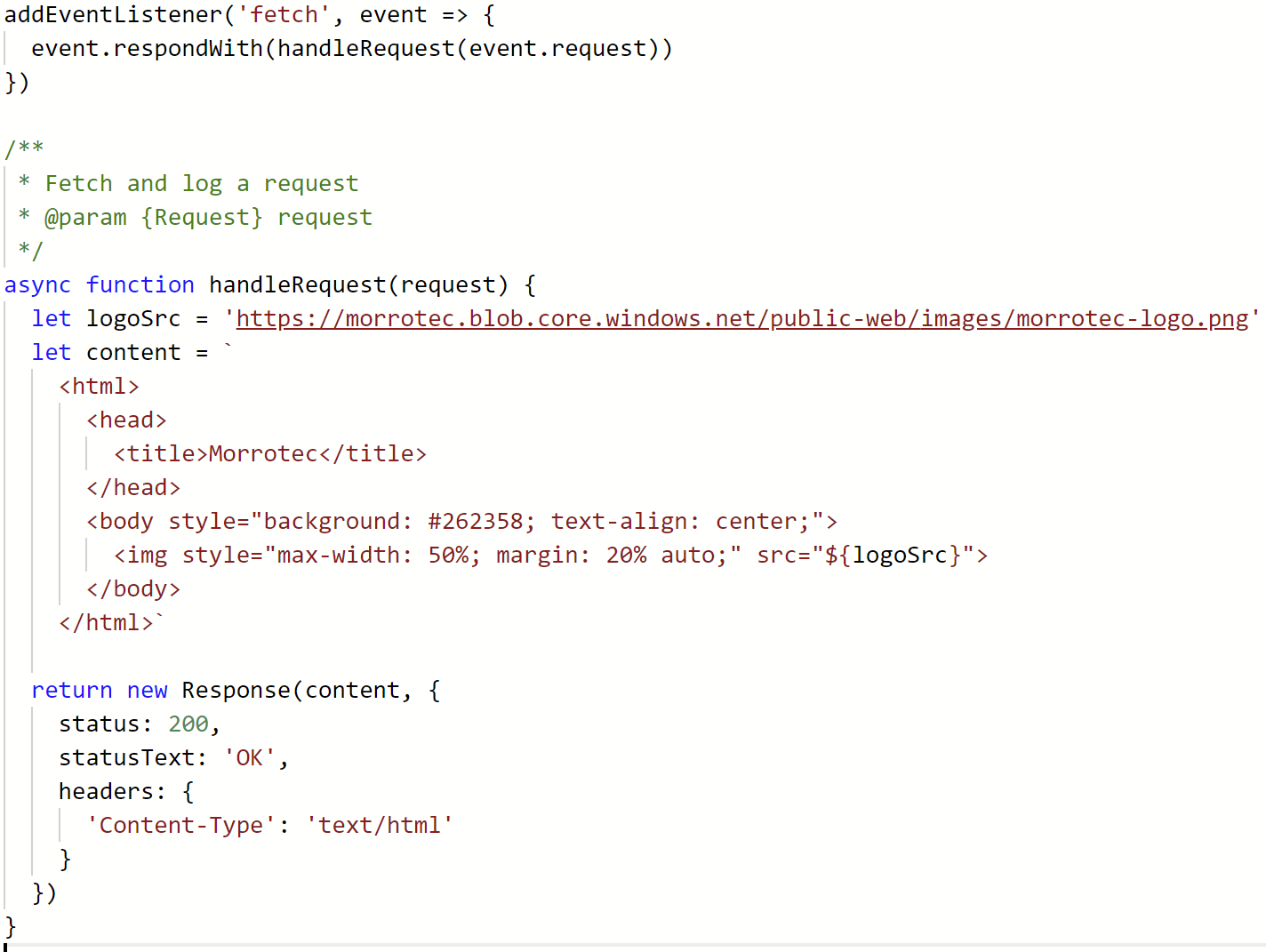

The first piece of the puzzle is the addEventListener() method. Its first parameter is the type of Worker event to listen for, and the one we’re interested in is “fetch”, which fires whenever a request activates the Worker. Pass in a handler for that event, call the event’s .respondWith() method with a Response (or a Promise that resolves to one), and we can inspect, modify or respond directly to the request, as well as make sub-requests out to other services.

The Cloudflare example just takes the request and passes it on without interruption using the Fetch API. For my little static content idea, I built up some HTML, pulled my new logo from an Azure CDN, and then created a new Response object with the appropriate headers and the HTML as its body. I then returned this to the client, without ever passing the request on to an origin.
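Since the script itself only appears as a screenshot, here is a hedged reconstruction of the same idea rather than the original code. The logo URL and the page copy are placeholders. In this sketch the logo is referenced by URL, so the visitor’s browser pulls it from the CDN; the Worker could instead `fetch()` the image itself if you wanted it served from your own domain. Note that no request ever reaches an origin.

```javascript
// Hypothetical CDN location for the logo.
const LOGO_URL = 'https://example.azureedge.net/branding/logo.png';

// Build the entire holding page inside the Worker.
function holdingPage() {
  const html = `<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="utf-8">
    <title>Morrotec</title>
  </head>
  <body>
    <img src="${LOGO_URL}" alt="Morrotec logo">
    <p>Our new site is on its way.</p>
  </body>
</html>`;
  return new Response(html, {
    status: 200,
    headers: { 'content-type': 'text/html; charset=utf-8' },
  });
}

if (typeof addEventListener === 'function') {
  // No fetch() to an origin: every matching request gets the holding page.
  addEventListener('fetch', (event) => event.respondWith(holdingPage()));
}
```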

In order for this to work when a client hits morrotec.co.uk, I had to set a route that activates the Worker. This simply involved opening the “Routes” tab in the Worker editor and adding a route for morrotec.co.uk/*. You can also add routes that disable the script, which is useful if, for example, you have an /admin route that needs to pass through unmodified.

All nice and simple, and done within 15 minutes. This gave me a simple, branded holding page that replaced the horrible old 503 “stopped website” message. A super-fast loading time (39ms in my testing) and Cloudflare’s automatic SSL were nice bonuses.

This is, of course, a very simple example, but it shows the ability to capture an incoming request and modify it and/or respond on behalf of an origin, which opens up a huge range of possibilities.

Two Flies with One Swatter

The beauty of serverless products like AWS Lambda or Azure Functions is their on-demand nature, the ability to scale up almost infinitely and back down again when not needed. This brings with it cost savings (you’re only paying while your code is running) and some fun use-cases like queue & event processing, timed tasks and webhooks.

On-demand scaling is made possible by the capability of the underlying platform to spin up new compute resources as needed, copy your code from storage, then run it. Repeat as needed to achieve (theoretically) infinite scale.

Pay-per-execution is possible because as soon as these resources are no longer needed (or after a cool-down window of, say, 15 minutes), your code is removed and the resources are de-allocated, ready to be re-allocated to another customer.

It is this ability to spin down to zero and de-allocate that brings with it the downside of traditional serverless: the “cold start”.

Cold starts occur when your function has been de-allocated due to inactivity and must then be re-allocated, warmed up, and have your code copied in from storage. This can cause a long delay when invoking a function that has been idle for some time. When I say “long”, I mean genuinely long in human terms: sometimes tens of seconds. For HTTP triggers in particular, this can be a major user-experience problem.

It isn’t much of a problem for systems under constant load (the resources may never be de-allocated), and it disappears for subsequent requests once the function has warmed up. However, if there are long gaps between function invocations, it can become something of a headache.

With this in mind, Cloudflare have been very smart in their implementation of Workers. They eschew the platform fabric used by Microsoft and Amazon, opting instead for V8 isolates, the same JavaScript engine technology a browser like Chrome uses to run multiple web apps in isolation. This brings with it code isolation (I can happily run my code right next to that of another, unknown customer), security (potentially malicious code running next door cannot affect mine), and a clever solution to the cold-start problem (how long does it take to open a new tab and download + run another web app?).

Some Gotchas

The main drawback I’ve found is that only one Worker can be added per domain (I believe this restriction is lifted for Enterprise subscriptions), and that Worker responds to a specific route. So, for example, if I manage morrotec.co.uk with Cloudflare, I can add only one Worker, activated by a specific route. That route can be a wildcard, so all requests made to morrotec.co.uk could activate the Worker.

Compare this with, for example, an Azure Functions app behind a Functions Proxy, where I can have multiple isolated functions behind a single domain (a serverless API, essentially), and the limitations of the Worker model as a true Functions/Lambda replacement start to show. As far as I can tell, this is not a limitation of the technology, and I’m hopeful that Cloudflare will remove the Worker-per-domain restriction for lower pricing tiers in future. That would really open up the platform as a true replacement for Azure Functions or Lambda.

In the meantime, within your Worker you can inspect the request and match on the URL path and the HTTP method, so you could easily run specific tasks per route, or even implement an API. There’s an interesting project here that allows Express JS-style routing for more exotic applications.
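A hand-rolled version of that route- and verb-matching might look like the sketch below. It is not the Express-style project mentioned above; the route table, paths and handlers are all hypothetical. Each route pairs an HTTP method with a regular expression for the path, and captured path segments (such as an item id) are passed to the handler. A path that matches with the wrong method gets a 405, and anything else gets a 404.

```javascript
// Helper: wrap data in a JSON Response.
function json(data, status = 200) {
  return new Response(JSON.stringify(data), {
    status,
    headers: { 'content-type': 'application/json' },
  });
}

// Hypothetical route table: method + path pattern -> handler.
const routes = [
  { method: 'GET', pattern: /^\/api\/status$/, handler: () => json({ ok: true }) },
  { method: 'GET', pattern: /^\/api\/items\/(\d+)$/, handler: (req, [id]) => json({ id: Number(id) }) },
  { method: 'POST', pattern: /^\/api\/items$/, handler: async (req) => json({ created: await req.json() }, 201) },
];

async function route(request) {
  const { pathname } = new URL(request.url);
  let pathMatched = false;
  for (const r of routes) {
    const match = r.pattern.exec(pathname);
    if (!match) continue;
    pathMatched = true;
    // Pass captured path segments (e.g. the item id) to the handler.
    if (r.method === request.method) return r.handler(request, match.slice(1));
  }
  return pathMatched
    ? new Response('Method not allowed', { status: 405 })
    : new Response('Not found', { status: 404 });
}

if (typeof addEventListener === 'function') {
  addEventListener('fetch', (event) => event.respondWith(route(event.request)));
}
```

Keeping the table as plain data makes it easy to add routes without touching the dispatch logic.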

I’ve got lots more ideas to try with Workers, and I’m excited to see the novel uses they can be put to. I’ve got a client project in mind for the API gateway mentioned earlier, so I will write more about that soon.

The possibilities of this are endless. Have fun!